Research-driven design for GenAI bias reporting.

Role

UX Design Lead

UX Researcher

Duration

August 2024-December 2024

What I Did

Storyboarding/Speed Dating

Think-Aloud Testing

Usability Testing

Metrics Evaluation

UX Flows

Rapid Prototyping

Team

Riya Mody (Project Manager)

Nikita Kulkarni (UX Research Lead)

Jasmine Kim (Visual Design Lead)

At-a-Glance

As part of CMU's User-Centered Research and Design course, I collaborated with a team to explore how everyday users could better report AI bias.

While our team collaborated on research and concept development, I spearheaded the design execution: I created all wireframes and prototypes in Figma and iterated on our interface based on research findings.

This academic project, while using ChatGPT as a case study, was not affiliated with OpenAI.

PROJECT BACKGROUND

Current bias reporting processes in GenAI systems are flawed.

While AI companies rely on technical experts to test for algorithmic bias, these expert-led audits often miss critical issues that only everyday users can discover through their real-world interactions with AI systems.

Primary Goal

How might we empower everyday users to seamlessly report harmful AI behaviors in a way that helps AI teams detect and address biases they might otherwise miss?

Scope

While everyday algorithm auditing could apply to many AI systems, we focused specifically on ChatGPT to deeply understand user behaviors and validate our solution approach.

Understanding Current User Behavior

Initial usability testing revealed fundamental flaws in ChatGPT's existing reporting system.

Research Setup

We conducted usability testing with 4 regular ChatGPT users, employing think-aloud protocols to understand their natural interactions with the current reporting system. Each session was recorded via Zoom with two researchers present: one interviewer and one notetaker.

METHOD DEEP-DIVE

Our test was structured to:

Have users explore ChatGPT's existing feedback features while thinking out loud

Observe their natural reporting behaviors

Allow participants to express confusion or uncertainty in real-time

Design Lens

As a designer observing these usability tests, I noticed users consistently highlighting text, a behavior that later inspired our core interaction design.

KEY FINDINGS

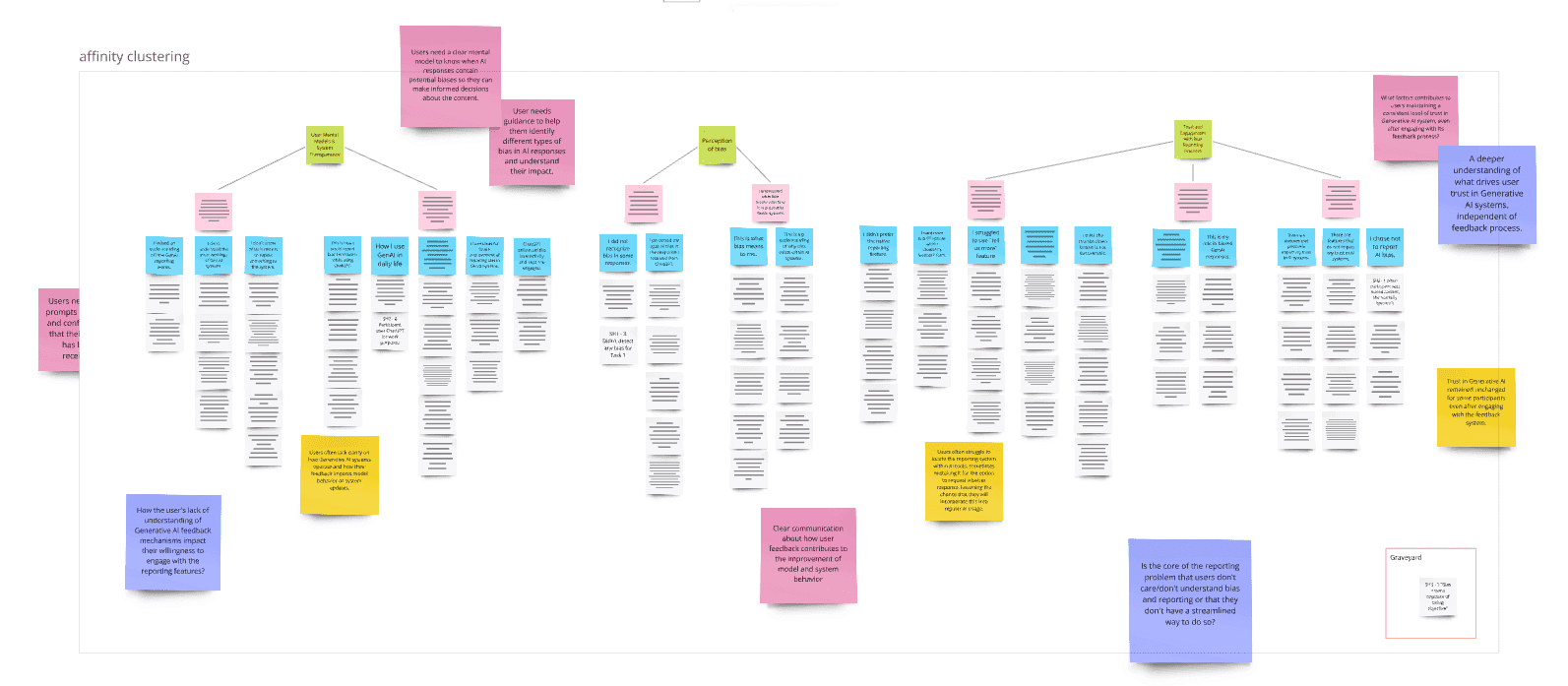

Through our analysis of interpretation notes and affinity clustering, we uncovered several critical insights:

🤔

Uncertainty Rules

Users are often uncertain if their reports actually have any impact.

⚡️

Wrong Tool for the Job

The existing feedback system, designed for general comments, lacks the structure needed for meaningful bias reporting.

🤖

Trust Issues

Users showed conflicting attitudes towards bias in AI, with their level of trust affecting their likelihood to report issues.

With these learnings in hand, we moved into our speed dating phase to test different concepts that could make reporting feel more intuitive and integrated into users' workflows.

SPEED DATING

Early concepts revealed users' desire for lightweight yet meaningful reporting.

Research Setup

We began with a creative ideation session using the Crazy 8s method for four How Might We questions. This rapid ideation helped us generate diverse concepts before developing more detailed storyboards for user testing.

Our Crazy 8s session.

Design Lens

During our Crazy 8s session, I sketched several interactions, drawing from my observation of users' natural behaviors in the discovery phase.

METHOD DEEP-DIVE

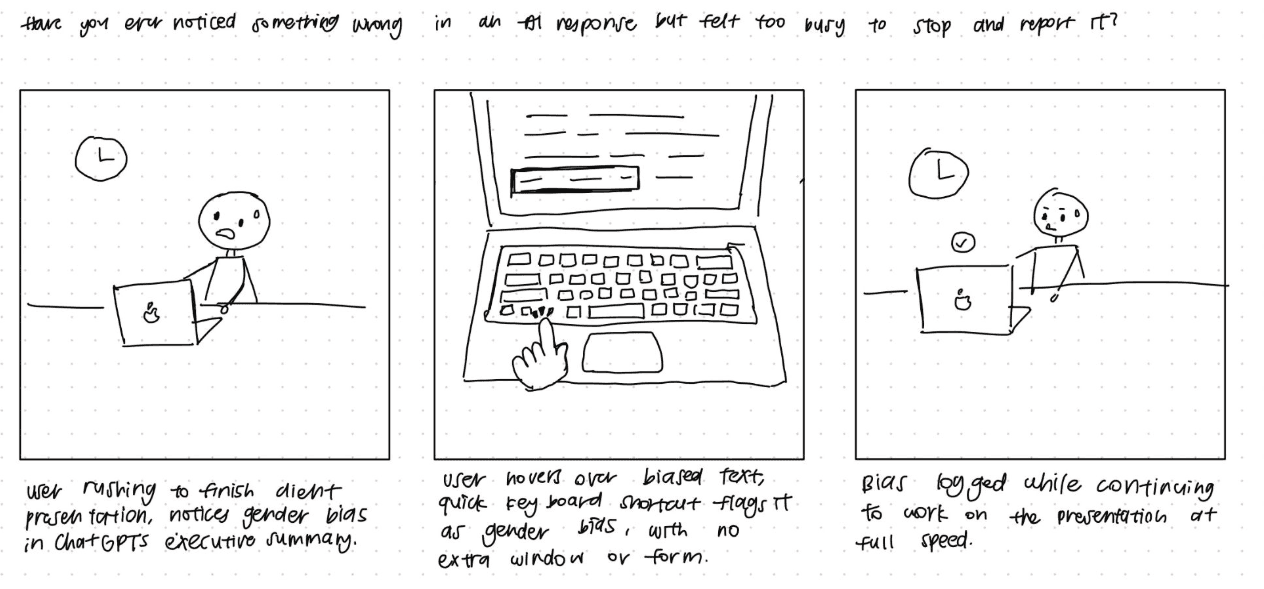

Our speed dating process involved:

Creating 12 storyboards spanning a range of psychological risk levels

Presenting varied bias reporting solutions to understand user preferences

Gathering rapid feedback on each approach

A storyboard we used during speed dating.

KEY FINDINGS

From these speed dating sessions, we surfaced important findings about user needs.

🎯

Context is Key

Users drew a distinction between harmful representational bias and beneficial personalized experiences.

🌊

Flow Matters

A reporting system needs to seamlessly integrate into users' existing workflows.

🎨

Personalization Preferred

One-size-fits-all reporting doesn't work; users need options that match their current cognitive load.

With clear user preferences for natural, flexible reporting, we refined our concept into a paper prototype for further testing.

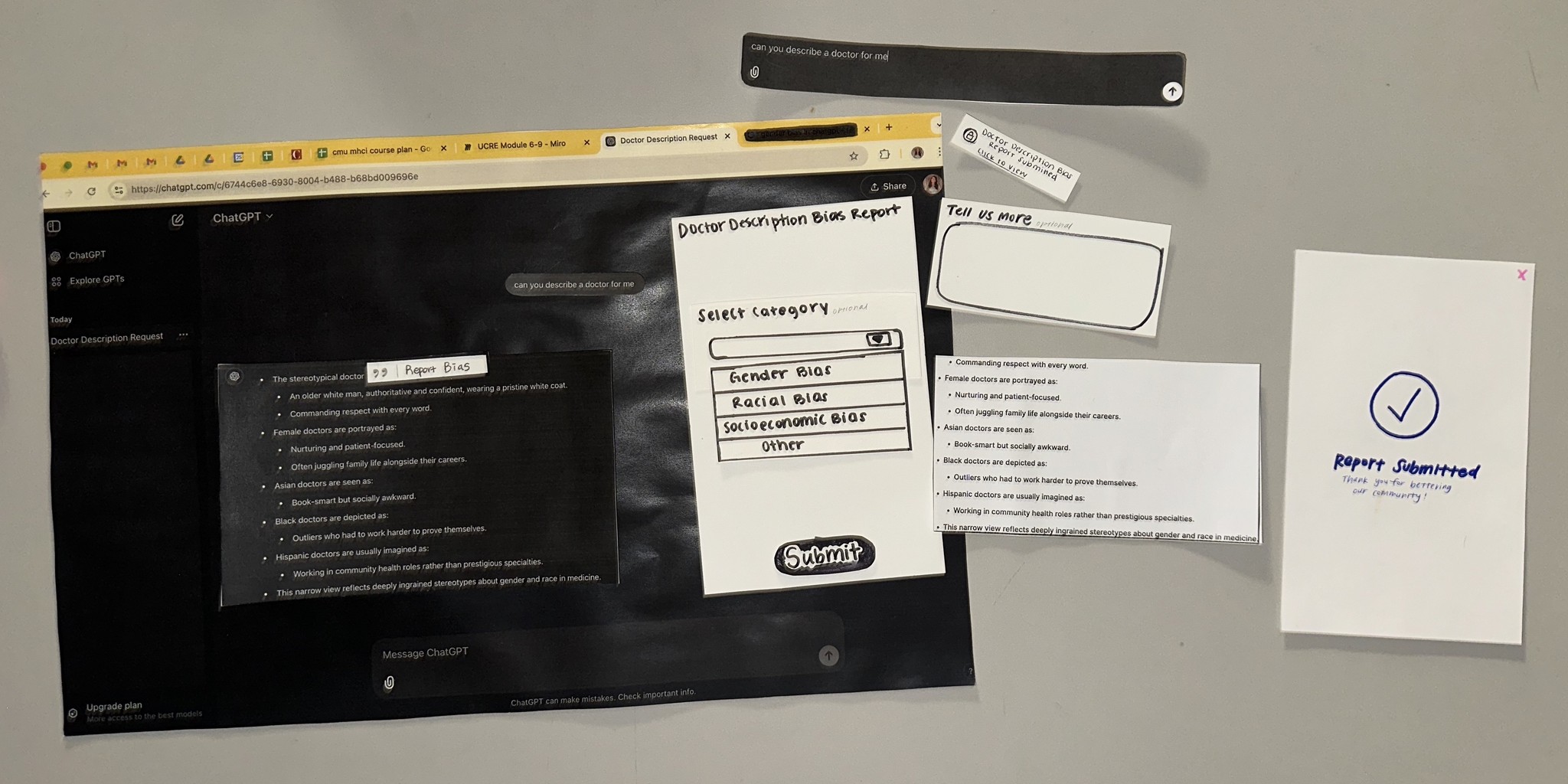

Paper Prototyping

Lo-fi testing validated our highlight-to-report approach.

The Concept

Building on insights from speed dating, we envisioned a seamless reporting system that would activate when users naturally highlight text in ChatGPT.

Research Setup

We created a low-fidelity paper prototype combining printed ChatGPT screenshots with hand-drawn elements to test our new reporting system. We designed specific tasks to test key interactions and assumptions.

Our paper prototype elements.

Design Lens

While creating the paper prototype elements, I worked with my team to ensure careful design of each interaction to test our highlight-to-report hypothesis.

KEY FINDINGS

As testers interacted with our paper prototype and shared their thoughts, several critical patterns emerged.

✨

Natural Interaction

Users naturally gravitated toward highlighting as their first action when spotting problematic content.

⏱️

Time Sensitivity

The decision to provide additional details was heavily influenced by users' immediate time constraints and task context.

📝

Flexible Input

Users appreciated the ability to choose how much detail to provide based on their current situation.

Building on the success of our paper prototype, we evolved the concept into Spotlight, enhancing the interface while maintaining the core highlighting interaction users loved.

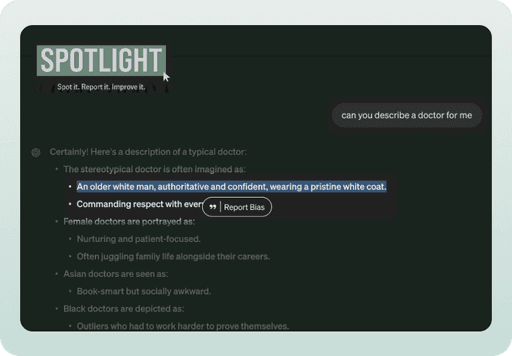

MEET SPOTLIGHT

Spotlight makes reporting AI bias as natural as highlighting text.

Design Lens

Based on our paper prototype findings, I made several key design decisions:

Positioned the reporting dialog close to highlighted text to maintain context

Simplified the category selection to reduce cognitive load

Added progressive disclosure to make detailed reporting optional

Here's how it works:

Highlighting potentially biased text in ChatGPT's response triggers a "Report Bias" dialog box

After clicking "Report Bias," users can select from predefined bias categories in the same dialog

Users can optionally select "Tell Us More," which opens a side panel for additional context

Upon submission, users receive a reference number to track their report's status
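The four steps above can be read as a small state machine. The sketch below is only an illustration of that flow, not how the Figma prototype was built: the category names, the `SPT-` reference format, the class and method names, and the rule that a category must be chosen before submitting are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum, auto
from itertools import count
from typing import Optional


class Step(Enum):
    IDLE = auto()               # nothing highlighted yet
    DIALOG_SHOWN = auto()       # "Report Bias" dialog is visible
    CHOOSING_CATEGORY = auto()  # user clicked "Report Bias"
    SUBMITTED = auto()          # report sent, reference issued


# Hypothetical categories; the case study does not list the real ones.
CATEGORIES = ("Gender", "Race or ethnicity", "Age", "Other")

# Hypothetical reference numbering, used only for this sketch.
_ref_counter = count(1)


@dataclass
class BiasReport:
    step: Step = Step.IDLE
    highlighted_text: str = ""
    category: Optional[str] = None
    details: Optional[str] = None    # stays None unless "Tell Us More" is used
    reference: Optional[str] = None

    def _require(self, expected: Step) -> None:
        if self.step is not expected:
            raise RuntimeError(f"expected {expected.name}, got {self.step.name}")

    def highlight(self, text: str) -> None:
        """Step 1: highlighting text brings up the dialog."""
        if not text.strip():
            return  # ignore empty selections
        self.highlighted_text = text
        self.step = Step.DIALOG_SHOWN

    def click_report_bias(self) -> None:
        """Step 2: the same dialog now shows the category choices."""
        self._require(Step.DIALOG_SHOWN)
        self.step = Step.CHOOSING_CATEGORY

    def select_category(self, category: str) -> None:
        self._require(Step.CHOOSING_CATEGORY)
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        self.category = category

    def tell_us_more(self, details: str) -> None:
        """Step 3 (optional): extra context from the side panel."""
        self._require(Step.CHOOSING_CATEGORY)
        self.details = details

    def submit(self) -> str:
        """Step 4: submitting returns a reference number for tracking."""
        self._require(Step.CHOOSING_CATEGORY)
        if self.category is None:
            raise ValueError("pick a category before submitting")  # assumed rule
        self.reference = f"SPT-{next(_ref_counter):05d}"
        self.step = Step.SUBMITTED
        return self.reference


# The quick path: highlight, report, pick a category, submit.
report = BiasReport()
report.highlight("an example sentence the user found biased")
report.click_report_bias()
report.select_category("Gender")
reference = report.submit()
```

Because `tell_us_more` is never required, the quick path and the detailed path end in the same `submit` call, which mirrors the progressive disclosure described in the design decisions.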

SUCCESS METRICS

System Usability Scale (SUS) Score: 81.9 (Excellent)

Users praised the natural workflow integration

Positive feedback on the ability to track report status

Strong appreciation for flexible detail levels
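For readers unfamiliar with how a SUS score is produced, here is a minimal sketch of the standard scoring rule: each participant answers ten items on a 1 to 5 scale, odd-numbered items contribute (answer - 1), even-numbered items contribute (5 - answer), and the sum is multiplied by 2.5 to give a 0 to 100 score. The study score is the mean across participants. The answers in the example are invented for illustration and are not our study data, and the function names are my own.

```python
from statistics import mean


def sus_score(responses):
    """Score one participant's ten SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten answers, each an integer from 1 to 5")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded.
            total += answer - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded.
            total += 5 - answer
    return total * 2.5


def study_sus(all_responses):
    """Average the per-participant scores into one study-level score."""
    return mean(sus_score(r) for r in all_responses)


# Made-up answers for two hypothetical participants (not real data).
example = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
]
example_mean = study_sus(example)
```

With these invented answers the two participants score 100 and 75, so the mean is 87.5.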

"Nice, it's super quick. Not having to like, stop everything I'm doing and go somewhere else."

— P2, on final prototype

"Hah, I'd totally find this by accident just highlighting stuff. Like, it's right there where you're already working."

— P3, on natural workflow integration

Design Lens

Drawing from our research insights, I designed Spotlight's interface to feel as natural as possible. I went through several iterations of the highlighting interaction and dialog placement, testing different approaches to find the most intuitive flow.

REFLECTING ON MY EXPERIENCE

Learning to think like a researcher: reflecting on my growth.

I gained hands-on experience with a variety of research methods, including think-aloud protocols, speed dating, and usability testing. I also learned how to choose the method that best fits a given research need.

Become more well-rounded as a UX/product designer

I learned to translate research insights into design decisions, making me a more well-rounded product designer who can bridge research and design.

Learn to effectively collaborate with UX researchers

As both a UX researcher and designer on this project, I gained a unique perspective on research-design collaboration. I developed the ability to understand research processes and how to best incorporate insights into the design process.

Impact on Future Work

While I've always recognized the importance of research, this project deepened my understanding of how research and design truly interweave.

I experienced firsthand how research insights continually shape and refine design decisions at every stage, and that hands-on experience turned my theoretical knowledge into practical expertise.